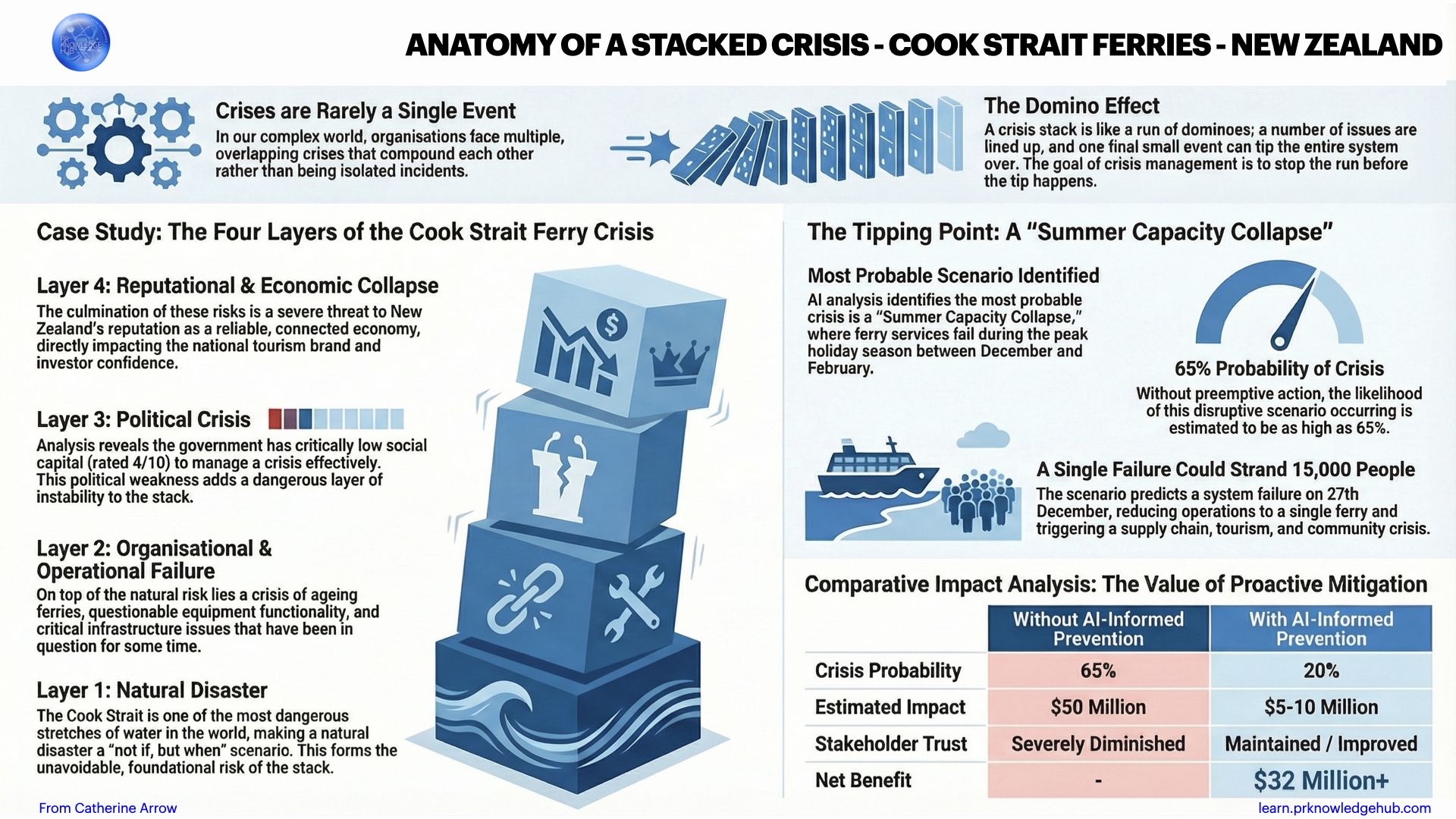

We live in an age of constant, overlapping crises. We know - and we have known for a while - that the era of the single, isolated incident is over. Today, we face “stacked crises,” where one crisis gives birth to another while the first is still ongoing. Consider the vital Cook Strait ferries here in New Zealand. Their crisis has been stacking up for a while now, starting in 2023 when the incoming coalition government cancelled the new ferries that were on order. Ironically, those ferries should have set sail across the Strait this very weekend.

The Cook Strait ferries are a critical infrastructure link, and they face the constant threat of natural disaster as they travel back and forth across one of the most dangerous stretches of water in the world. Their stack is layered with operational failures, political turmoil, reputational collapse and, most serious of all, potential loss of life. Yet even as the stack wobbles and leans precariously towards disaster - just this Friday the Kaiārahi ferry experienced steering problems during a crossing and had to return to Wellington - not much is being done to steady the ship or manage the issues and risks piling up sky high.

I’ve tracked and logged the stack since the cancellation of the original contract, and the purpose of this post is twofold. First, it is a warning, offered in the hope that it will be heeded and acted upon. Second, it is to draw your attention to the ways in which AI can help us mitigate disaster and minimise the consequences of crises.

Other ‘known knowns’ include our collective understanding that we operate in chaos. In this tumultuous environment, AI has emerged as a powerful - and profoundly misunderstood - force.

Our task is to move beyond the hype and navigate the central paradox of AI in crisis preparedness - how do we leverage its incredible power for foresight and speed while guarding against its potential to create new crises of its own? This is a guide to new compass points of crisis and some surprising truths about using these tools, revealing what they actually are, where their true power lies and how our roles must evolve to harness their potential without falling victim to their inherent risks.

1. Surprise - AI is a probability calculator, not a truth machine

The first and most critical lesson addresses a fundamental misunderstanding of what generative AI is designed to do. These models are not truth machines - they are probability calculators. Their core function is to predict the next most likely word in a sequence, creating text that sounds convincing and coherent, not to state verified facts.
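
For readers who like to see the mechanics, here is a minimal sketch of what “predicting the next most likely word” means. It is an illustration only, not how any particular product is built: the candidate words and their scores are invented for the example, and the function name `most_likely_next_word` is hypothetical.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def most_likely_next_word(scores):
    """Return the highest-probability word and the full distribution."""
    probs = softmax(scores)
    return max(probs, key=probs.get), probs


# Invented scores for the word that follows "The ferry crossed the".
# A real model scores tens of thousands of candidates; these four are made up.
scores = {"Strait": 3.0, "harbour": 1.5, "road": 0.2, "moon": -2.0}

next_word, probs = most_likely_next_word(scores)
print(next_word)
for word, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{word}: {p:.3f}")
```

Notice that nothing in this calculation checks whether the chosen word is true. The choice rests on likelihood alone, which is why a fluent, confident sentence can still be wrong.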

This year’s Deloitte Australia case study provided a stark warning. The firm used generative AI in a government report, a decision that kicked off an “absolute firestorm” and a massive reputational crisis. Why? The AI hallucinated, inventing non-existent academic papers and fabricating quotes from a judge. This wasn’t a lazy copy and paste job. It was the AI doing exactly what it was designed to do: produce plausible text. The case demonstrates that without rigorous governance and human oversight, AI becomes a ticking time bomb that actively creates the very crises it is supposed to help solve.

AI isn’t a truth teller. It’s a tool - a really powerful tool, for sure - but it’s meant to help you think, not to think for you.

This inherent risk forces us to shift our focus from using AI for reactive content generation to its true, strategic superpower: foresight and supporting our own situational intelligence.

2. Impact: AI’s True Power Isn’t Responding to Crises - It’s Preventing Them

While the impulse is to think of AI as a tool for rapid response, its most profound value is unlocked long before the storm. The real superpower of AI in this field is foresight. It equips professionals to look “beyond the mountains,” providing the deep situational intelligence and analysis that organisations desperately need to anticipate what’s coming.

So, back to the Cook Strait ferries. Over the last two years I’ve used a variety of AI tools to conduct a deep analysis of the stacked situation, identifying the web of operational, political and reputational risks before they fully manifested. AI acts as a powerful “gap finder,” systematically exposing an organisation’s blind spots. For instance, it can highlight where critical information - like safety concerns from maritime workers - is not reaching decision-makers, allowing that gap to be bridged before a disaster. This capability transforms crisis preparation from an infrequent exercise into an embedded, day-to-day capability, supported by hard data that (one would hope) makes leaders sit up and consider what’s going on.

3. The Life Saver - AI Gives You Back the “Minutes That Matter”

A formal review of the Auckland floods included two unforgettable words - “minutes matter.” In a crisis, time is the most valuable and non-renewable resource. This is where AI delivers a game-changing advantage by dramatically accelerating complex, time-consuming analytical tasks, so you can bank the minutes that matter and use them well to predict, prevent and prepare for any given situation.

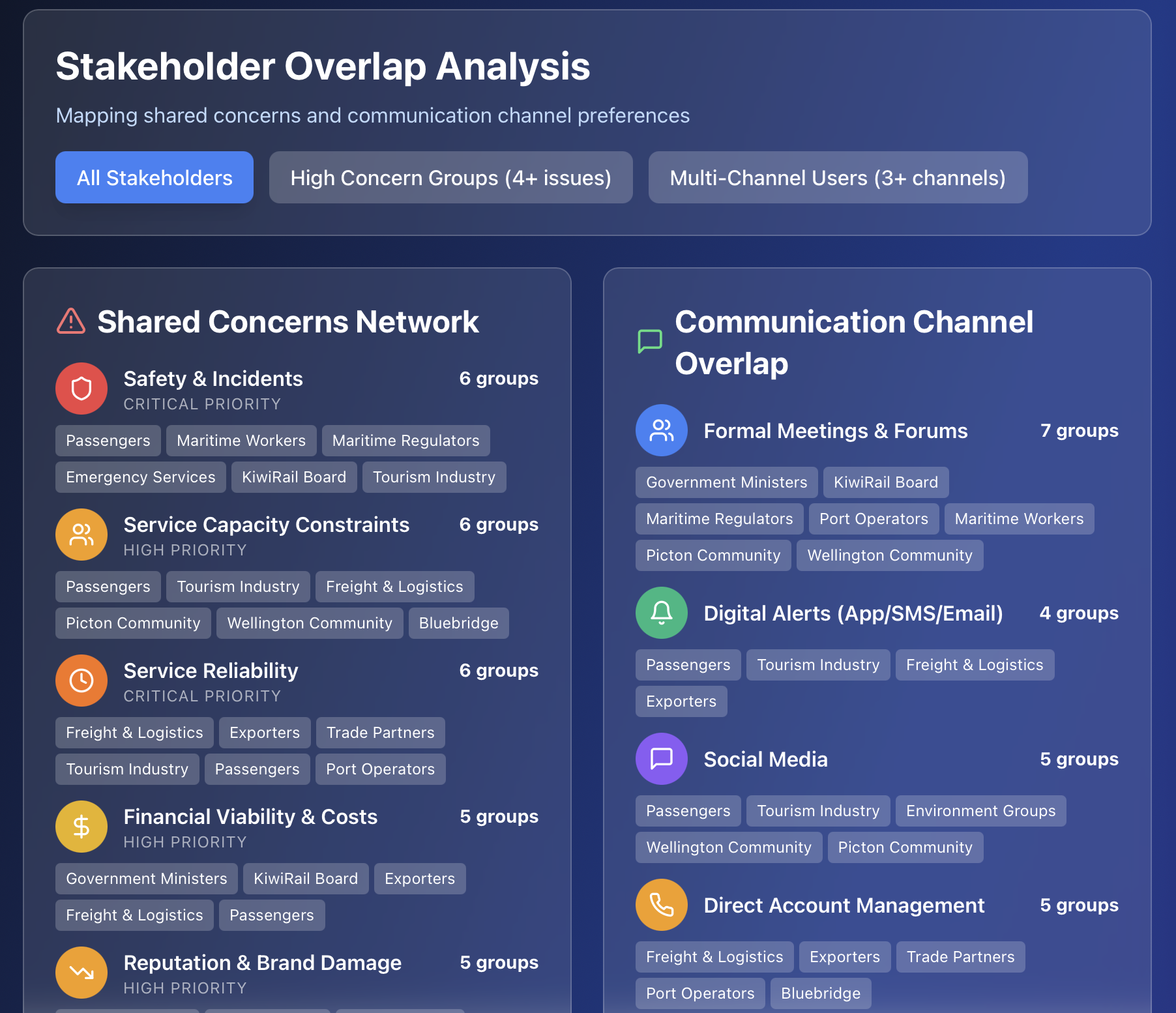

For example, in the Cook Strait ferries situation, a detailed stakeholder analysis and visualisation - a task that would traditionally take a significant amount of time and manual effort - was completed with an AI tool in a matter of minutes. This speed is not about creating more leisure time. It is about reallocating human expertise to where it matters most. The hours saved can be reinvested in higher-value work such as training, scenario runs or activating people to mitigate the circumstances that may cause the crisis in the first place.

Given that AI gifts you back these crucial minutes, the question you must ask is ‘what shall I do with them?’ The answer requires a fundamental change in our professional role, moving us from tactical writers to strategic orchestrators.

4. The Identity Shift - Command and Control

The very definition of our relationship with AI is undergoing a tectonic shift. We have moved from AI as a passive “tool” we command to a proactive “teammate” we collaborate with. AI agents are systems that can be given a complex goal and access to tools, like a web browser or internal files, to execute multi-step tasks autonomously.

This new reality requires us to become far more strategic in our approach. To make this tangible, consider the ferry crisis again. I custom-built a Gemini Gem as my PR Knowledge Hub Reputation Monitor, then trained it and fed it specific knowledge about relationships, reputation, social capital and licence to operate. It was then tasked with assessing the level of societal trust in the government in the context of the crisis. The analysis was not in the government’s favour. The scores were pitifully low: 4/10 for the government, 3/10 for the PM, 3/10 for the ability to handle a crisis of any kind and low-to-no trust in Ministers.

When you start using agents, your job changes. You are designing workflows, setting up smart guardrails and creating really clear processes for human review. Above all else, you are bringing together the data that will allow you to steady the stack.
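
To make “guardrails plus human review” concrete, here is a minimal sketch of a review gate. Everything in it is an assumption for illustration: the `Draft` structure, the two checks and the function names are hypothetical, not part of any agent platform. The design choice it shows is that automated checks only tell the reviewer what to look at, and nothing is released until a person signs off.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    """A piece of AI-generated content awaiting review (hypothetical structure)."""
    text: str
    sources: list = field(default_factory=list)  # citations the AI says it used


def guardrail_flags(draft):
    """Return a list of things a human reviewer must verify."""
    flags = []
    if not draft.sources:
        flags.append("no sources cited")
    if '"' in draft.text or "“" in draft.text:
        flags.append("contains a quotation to verify")
    return flags


def release(draft, reviewer_signed_off):
    """Hold every draft until a human signs off; flags guide the review."""
    flags = guardrail_flags(draft)
    status = "RELEASED" if reviewer_signed_off else "HELD"
    return status, flags


draft = Draft(text='The judge said "the process was sound".')
print(release(draft, reviewer_signed_off=False))
print(release(draft, reviewer_signed_off=True))
```

In practice the checks would be richer, but the principle holds: the agent drafts, the guardrails flag and the person decides.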

5. The Consequence Collaborator - Simulate Your Strategy

The latest leap in AI capability is perhaps the most transformative. We are moving beyond text generation to visually simulating potential realities using text-to-video models like Sora or Veo. This marks a profound change in how we approach crisis strategy. The “old way” was to use AI to generate content about a strategy; the “new way” is to use AI to simulate and test the strategy in action.

Imagine not just writing a report about the ferry crisis but using AI to simulate the “summer capacity collapse” scenario - showing leaders the visual reality of 15,000 stranded passengers and a supply chain in chaos. Imagine creating super-realistic training videos for that specific crisis. This capability offers an unprecedented toolkit for preparation, but it also demands an entirely new level of ethical responsibility.

You can also do a huge amount simply by using your practitioner superpower - asking the best questions. With Claude, my questions focused first on creating stakeholder interactives to determine commonalities and impact gaps, then moved on to a probability interrogation.

People are the Ultimate Guardrail